How to build a linguistically valuable corpus from the web

The web is a great source of readily available textual data but also a limitless warehouse of spam, machine-generated content and duplicated content unsuitable for linguistic analysis. This can raise doubts about the quality of the language included in corpora built from the web.

At Sketch Engine, we are well aware of the problems associated with building web corpora. This is why we never blindly include whatever the web offers. Typically, we discard between 40 % and 60 % of the textual content we download. The data which are unsuitable for linguistic analysis are identified using a sophisticated procedure with a special focus on the following issues.

Duplicated content

It is not uncommon that identical or nearly identical content is found on several websites or even on different pages of the same website. For example, media conglomerates often post the same news article, sometimes with minor changes or in a shortened or extended version, on all the sites they own. Similarly, travel agencies often include tourist resort descriptions on their websites. However, these descriptions are typically not written from scratch but copied, and sometimes slightly adapted, from other tourism websites.

The fact that the same text appears several times on the web does not mean that it was written several times. Including each instance of the article in a general language corpus would distort the information derived from the corpus about how language is used. To put it simply, the corpus would show that the language in the duplicated content is used more frequently than it really is. A web corpus can very easily suffer from this issue, which makes it useless.

How is duplicated content avoided?

Sketch Engine uses a deduplication procedure which is able to detect perfect duplicates as well as texts which were slightly adapted, shortened or extended. This means that if a text is shared with only minor changes, only one instance is kept in the corpus. The deduplication is carried out at paragraph level, which means that whole paragraphs are compared for similarity. If two paragraphs anywhere in the corpus are identified as identical or nearly identical, one of them will be removed. Therefore, the web corpus may contain documents from which one or more paragraphs are missing.
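The idea can be sketched as follows. This is a minimal illustration, assuming that near-duplicates are detected by the share of word n-grams already seen earlier in the corpus. The function names, the n-gram size and the 0.5 threshold are illustrative assumptions, not the actual settings or implementation used by Sketch Engine.

```python
import re


def shingles(text, n=3):
    """Return the set of lowercased word n-grams of a paragraph."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < n:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def deduplicate_paragraphs(documents, threshold=0.5, n=3):
    """Drop paragraphs whose n-grams were mostly seen earlier in the corpus.

    documents: list of documents, each a list of paragraph strings.
    threshold: hypothetical share of already-seen n-grams above which
               a paragraph is treated as a (near-)duplicate.
    """
    seen = set()
    cleaned = []
    for paragraphs in documents:
        kept = []
        for paragraph in paragraphs:
            grams = shingles(paragraph, n)
            if not grams:
                continue  # nothing to compare
            overlap = len(grams & seen) / len(grams)
            if overlap > threshold:
                continue  # duplicate or near-duplicate: only the first copy stays
            seen |= grams
            kept.append(paragraph)
        cleaned.append(kept)
    return cleaned
```

With this sketch, a resort description copied to a second site with one word changed is dropped from the second document, while the paragraphs unique to that document are kept. The second document therefore ends up with a missing paragraph.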

Deduplication with user corpora

When users build their own corpora in Sketch Engine using the built-in WebBootCaT tool, deduplication is deactivated by default because there are many scenarios when the user may need to include repetitive content. For example, the user might want to analyse how many times the same piece of news appeared in the corpus.

The deduplication can be activated during corpus compilation (use Expert settings) and it is also possible to select the level at which it will be carried out. This can be the sentence level, paragraph level, document level or any other structure. For example, with the sentence level selected, individual sentences will be compared and if identical sentences are found, all but one will be removed from the corpus.
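The effect of the selected level can be illustrated with a small sketch of exact-duplicate removal. The level names, the naive sentence splitter and the function names are assumptions made for this example and do not correspond to the actual Expert settings.

```python
import re


def split_units(document, level):
    """Split a document string into units of the chosen level."""
    if level == "document":
        return [document]
    if level == "paragraph":
        # Paragraphs are assumed to be separated by a blank line.
        return [p for p in document.split("\n\n") if p.strip()]
    if level == "sentence":
        # Naive splitter: break after ., ! or ? followed by whitespace.
        return [s for s in re.split(r"(?<=[.!?])\s+", document) if s.strip()]
    raise ValueError(f"unknown level: {level}")


def deduplicate(documents, level="paragraph"):
    """Keep only the first occurrence of each identical unit in the corpus."""
    seen = set()
    result = []
    for document in documents:
        kept = []
        for unit in split_units(document, level):
            key = " ".join(unit.split()).lower()  # ignore case and spacing
            if key in seen:
                continue
            seen.add(key)
            kept.append(unit)
        result.append(kept)
    return result
```

With two documents sharing one sentence, the sentence level removes the second copy of that sentence, whereas the document level keeps both documents untouched because they are not identical as a whole.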

Unwanted content

The internet is full of textual content which has hardly any linguistic value (unless one wants to study this type of text specifically). This may include:

- texts made up of incomplete sentences (post comments, reviews and discussions)

- on-page advertisements

- repetitive content found on each webpage of a site (navigation menus, top menus, end matter text, legal clauses)

- text snippets (beginnings of posts or pages inviting the user to read the complete article or page)

The above-mentioned types of unwanted content are eliminated with JusText, a specialized tool able to identify and remove this content from a downloaded web page. This tool is also applied to user corpora when using the integrated corpus building tool with the web option.

Applying the JusText tool to the following pages will remove everything but the content marked in green. Nothing will make it into the corpus from the first web page because no piece of text is long enough to provide the necessary context for linguistic analysis.

A home page of a news site with only news headlines or news snippets without sufficient context. Nothing will be included in the corpus.

The main body of a blog post or news article will be included in the corpus; the remaining content will be ignored.
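The kind of decision described above can be approximated with a very simplified sketch. It assumes only two signals, the length of a text block and the share of function words in it. The real JusText tool is more elaborate, and the word list, thresholds and function names below are hypothetical.

```python
# A tiny, illustrative list of English function words (assumption).
STOPWORDS = {
    "the", "a", "an", "of", "to", "in", "and", "is", "was", "that",
    "it", "on", "for", "with", "as", "by", "at",
}


def is_main_content(block, min_words=10, min_stopword_ratio=0.3):
    """Guess whether a text block is running text rather than boilerplate."""
    words = block.lower().split()
    if len(words) < min_words:
        return False  # menus, headlines and snippets are too short
    stop = sum(1 for w in words if w.strip(".,;:!?\"'()") in STOPWORDS)
    # Running text contains many function words; keyword lists do not.
    return stop / len(words) >= min_stopword_ratio


def clean_page(blocks):
    """Return only the blocks that look like the main body of the page."""
    return [block for block in blocks if is_main_content(block)]
```

Under these assumptions, a home page consisting only of a navigation menu, headlines and "Read more" links yields an empty result, while a full sentence from an article body is kept.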

Spam

By spam we refer to text found on the internet which was not produced by a human or which was only produced once but was automatically replicated in many other places on the web. Spam may include:

- texts whose abundance on the internet is highly disproportionate to their frequency outside the internet (pornographic and adult sites, sites selling products for slimming, muscle gain, hair growth and other health products). These sites often get duplicated automatically on various URLs which increases their presence even further.

- machine-generated text, often not intended to communicate any meaningful information

- machine-translated texts

The presence of spam in our web corpora is partly eliminated by deduplication. In the worst-case scenario, at most one copy of each page will be present in the corpora. However, the main method of avoiding spam in our corpora is the use of seed URLs.

Seed URLs

The process of web crawling is not completely random. Before the crawling starts, a list of respectable, high-quality websites is compiled and the web crawler starts by downloading the content of these seed URLs. They can be media sites, blogs, professional sites and also other sites from which we downloaded good content in the past. If a link leading to another website is found, the web crawler will follow the link but it will only continue doing so up to a previously defined level. Since most of the unwanted web content is in English, the level has to be set low when building an English web corpus. It can be higher for other major languages and higher still for less widely used languages, where the danger of reaching spam is much lower.
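A minimal sketch of such depth-limited crawling is shown below. It assumes that the level is the number of link hops from a seed URL and that `get_links` is a caller-supplied function returning the links found on a page. Both are assumptions made for illustration and do not describe the actual crawler.

```python
from collections import deque


def crawl(seed_urls, get_links, max_depth):
    """Breadth-first crawl that stops following links max_depth hops from a seed.

    Returns a dict mapping each visited URL to its distance from the seeds.
    """
    depth_of = {url: 0 for url in seed_urls}
    queue = deque(seed_urls)
    while queue:
        url = queue.popleft()
        if depth_of[url] >= max_depth:
            continue  # the page is kept but its links are not followed
        for link in get_links(url):
            if link not in depth_of:
                depth_of[link] = depth_of[url] + 1
                queue.append(link)
    return depth_of


# Toy link graph standing in for the web (hypothetical hosts).
WEB = {
    "news.example": ["blog.example"],
    "blog.example": ["shop.example"],
    "shop.example": ["spam.example"],
    "spam.example": [],
}
visited = crawl(["news.example"], lambda url: WEB.get(url, []), max_depth=1)
```

In this toy graph, a low level keeps the crawler close to the trusted seed, while a higher level lets it wander further and eventually reach the spam site.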

Seed URLs cannot be used when building user corpora with the built-in WebBootCaT tool. They are only used in web corpus building carried out by the Sketch Engine team. Users can, however, build a corpus by downloading websites one at a time by using WebBootCaT with the website option.

Additional criteria

The process of web crawling to obtain a general language web corpus also includes various additional criteria.

Length

A document is only kept in the corpus if the downloaded web page contains enough data after applying the above cleaning tools. If the document is too short, for example, one sentence only, the document will not be included because a lone sentence out of context is rarely linguistically valuable. On the other hand, if the document is too long, for example, many thousands of words, it might be an indicator that the content is not a standard webpage or that the content may not be of linguistic nature at all. Such documents are also not included.

When building user corpora with the built-in WebBootCaT tool, these parameters can be set to different values or even disabled to include absolutely all text in the corpus.
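The length criterion can be sketched as a simple filter. The limits of 50 and 20,000 words are placeholders chosen for this example, since the actual values are not stated here. Passing `None` disables a limit.

```python
def keep_by_length(text, min_words=50, max_words=20000):
    """Decide whether a cleaned document has an acceptable length.

    min_words and max_words are hypothetical limits; None disables a limit.
    """
    count = len(text.split())
    if min_words is not None and count < min_words:
        return False  # too short to provide context
    if max_words is not None and count > max_words:
        return False  # suspiciously long for a standard web page
    return True
```

Calling `keep_by_length(text, min_words=None, max_words=None)` accepts every document, which corresponds to disabling the length check entirely.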

Language detection

During web crawling, the language of the downloaded text is detected and only texts in the desired language are included. This means that an English corpus can contain pages published on German, Spanish, French, Japanese and other websites as long as they are in English.
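The filtering step can be illustrated with a deliberately naive sketch that guesses the language from a few function words. Production language detection typically relies on trained models, so the word lists and function names below are assumptions for illustration only.

```python
# Tiny function-word profiles per language (illustrative assumption).
PROFILES = {
    "en": {"the", "and", "of", "to", "in", "is", "that", "it"},
    "de": {"der", "die", "das", "und", "ist", "nicht", "ein", "zu"},
    "es": {"el", "la", "los", "que", "y", "en", "es", "una"},
}


def guess_language(text):
    """Return the language whose function words occur most often, or None."""
    words = text.lower().split()
    scores = {
        lang: sum(word in vocab for word in words)
        for lang, vocab in PROFILES.items()
    }
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None


def filter_by_language(pages, wanted="en"):
    """Keep only pages whose text is in the wanted language."""
    return [page for page in pages if guess_language(page["text"]) == wanted]
```

Note that the filter looks only at the text, not at the URL, so an English page hosted on a German website passes the English filter while a German page on the same site does not.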

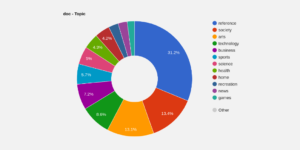

Where are the texts from?

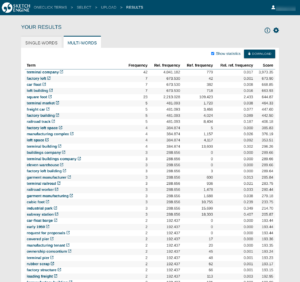

Despite the use of seed URLs as the starting points for the web crawling, it is not easy to generalise and give a simple answer to this question. However, each document (a downloaded web page) in the corpus comes with metadata such as the source website as well as the exact URL from which the text was downloaded. The user can generate a list of all the websites or URLs together with the number of documents or tokens downloaded from each source. This can provide some insight into where the data come from.
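The kind of per-source summary described above can be sketched as follows, assuming each document is available as a dictionary with a `url` and a `text` field and that tokens are approximated by whitespace-separated words. These field names and the tokenization are assumptions, not the Sketch Engine data model.

```python
from collections import Counter
from urllib.parse import urlparse


def source_statistics(documents):
    """Count documents and tokens per source website."""
    docs_per_site = Counter()
    tokens_per_site = Counter()
    for doc in documents:
        site = urlparse(doc["url"]).netloc  # e.g. "news.example"
        docs_per_site[site] += 1
        tokens_per_site[site] += len(doc["text"].split())
    return docs_per_site, tokens_per_site
```

Calling `docs_per_site.most_common()` on the first returned counter lists the websites ordered by the number of documents they contributed.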

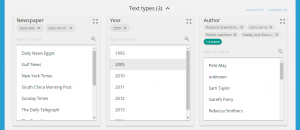

Similarly, it is possible to display this information for each concordance line or to narrow the search to only certain websites so the user is always in control of where the results come from. Text types or subcorpora are the functionalities designed to achieve this.

How to build your own web corpus

There is little point in building your own general purpose web corpus because there are plenty of them in Sketch Engine already. The largest ones have a size of 40 billion words, and the Timestamped corpus in 18 languages is even updated daily.

If you need to build a specialized corpus, use the built-in web corpus building tool with one or more of these options:

- build a corpus from a web search

- build a corpus from web links

- download a website.

For geeks

Most tools integrated into Sketch Engine, both in its web crawling pipeline and in the WebBootCaT tool, are open source and can be downloaded individually from http://corpus.tools. Their installation, configuration and use may, however, require advanced IT skills. It is generally far more effective to work with the corpora preloaded in Sketch Engine or to use the built-in corpus building tool.